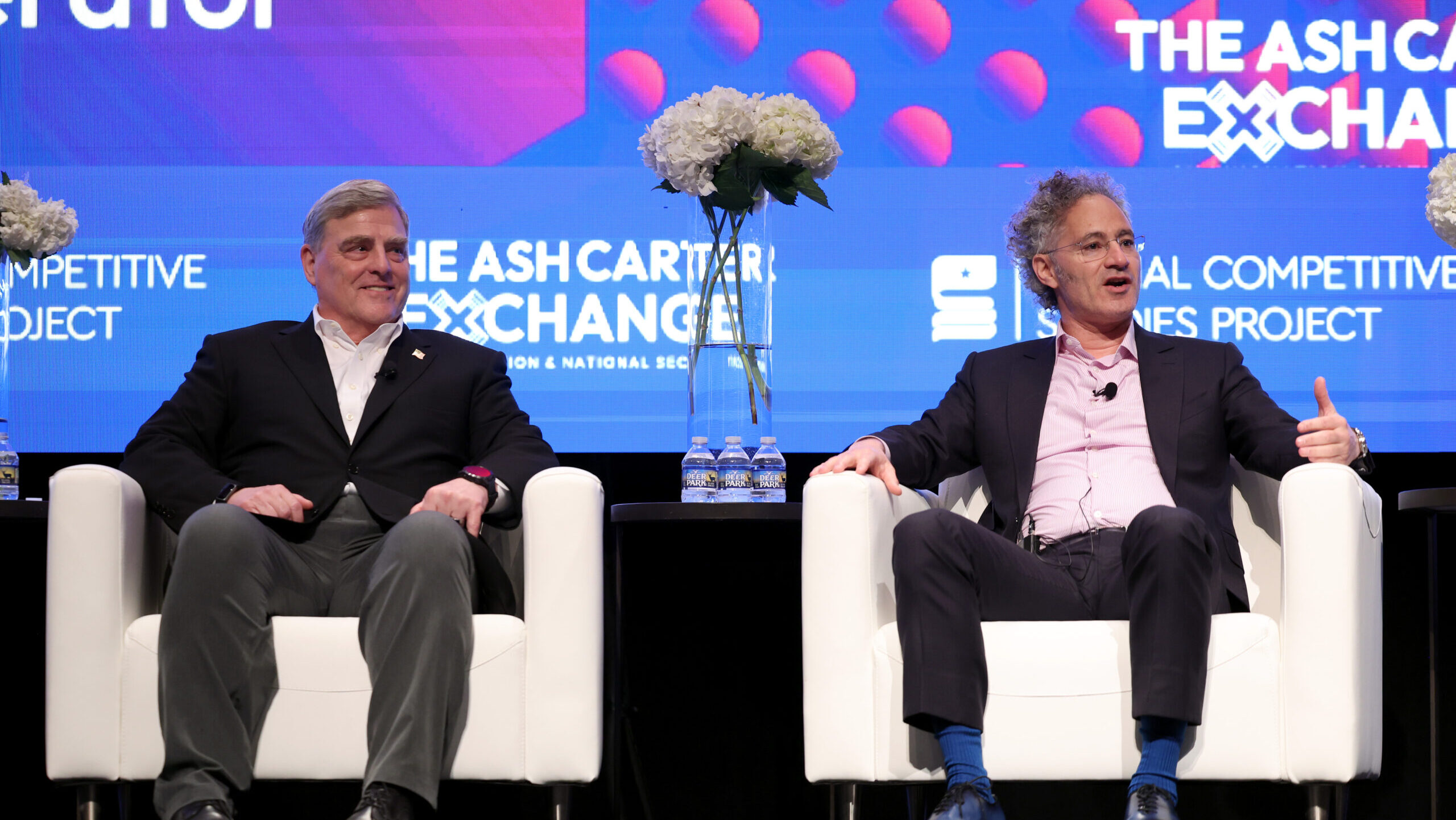

WASHINGTON, DC – MAY 07: (L-R) General Mark A. Milley and Alex Karp speak for The Ash Carter Exchange on Innovation and National Security panel during the AI Expo For National Competitiveness at Walter E. Washington Convention Center on May 07, 2024 in Washington, DC. (Photo by Tasos Katopodis/Getty Images for Palantir)

WASHINGTON — With Hamas’s health ministry claiming almost 35,000 dead in Gaza and a potential ceasefire much in doubt, what’s euphemistically called “collateral damage” was much in mind Tuesday morning on the main stage of the second annual Ash Carter Exchange, a conference in memoriam of one of the Pentagon’s leading technophiles.

Two speakers — the fiery founder-CEO of Palantir, Alex Karp, and the blunt former Chairman of the Joint Chiefs, retired Gen. Mark Milley — were outspoken in their support for the Israeli campaign. But the two men diverged over whether more discriminating tech might make future wars less brutal.

Karp, a tech entrepreneur, was characteristically bullish on technology, while also taking the opportunity to rail against “pagan” and “cancerous” critics he said are holding Israel to “an impossible standard” the US could and should not meet itself. While not as outspoken, former Google CEO Eric Schmidt, whose SCSP think tank hosted the conference, and the Deputy Director of the CIA, David Cohen, generally agreed that technology, including artificial intelligence, could make war more precise and less brutal.

But Milley, the only combat veteran on the morning panel, spoke darkly about urban battles yet to come.

The kind of devastation seen in Gaza, Milley argued, is a tragic inevitability of war — in any era, no matter how marvelous the technology. Asked to identify the most dangerous misconception the American public might have, he responded: “The idea that war is antiseptic and there’s wonder weapons out there, that we can somehow make it painless… to think that technology’s going to resolve the horrors of war. It’s not.”

All four men acknowledged the potential for precision. With Milley alone carrying three online devices — smartwatch, iPhone, and Fitbit — the former four-star joked a sophisticated adversary could figure out which panelist was sitting in which chair and pick them off at will. “They know I’m sitting right next to Alex and I’m sitting right next to David,” Milley said. “If someone wanted to, they could then hit with precision at range and they could probably take out Cohen before me — and I wouldn’t be hurt.”

But just because such surgical precision is possible, that doesn’t necessarily make it probable. In Gaza, the Israel Defense Forces have made extensive use of AI targeting systems with codenames like “Gospel,” “Lavender,” and “Where’s Daddy,” using cell phones, social media, and other surveillance data to track, for instance, when suspected Hamas operatives returned home to their families at night. But skeptics within the IDF itself told The Guardian and the Israeli-Palestinian +972 Magazine in April that, under intense pressure to retaliate for 1,200 Israeli deaths, commanders set the algorithms’ criteria for a legitimate target so broadly, the threshold for acceptable civilian casualties so high, and the time for human double-checking so short, that AI ended up enabling indiscriminate bombing of civilian homes.

Even the most restrained military, however, would struggle to avoid collateral damage in dense urban areas, and with over two million people crammed into under 140 square miles, the population density of the Gaza Strip is about the same as London’s. And in Milley’s grim forecast for the future, such city fighting will become the norm as the global population grows not only larger — to a UN-projected 9.7 billion by 2050 — but more urban, from roughly 50-50 urban-rural today to two-thirds urban by mid-century.

War “will occur where the people are,” said Milley. “It’s a horrible, brutal, vicious thing…Unfortunately, because [future] war is going to be in dense urban areas, the very conduct of war is going to have very high levels of collateral damage.”

Hope for a less brutal future came, uncharacteristically, from the CIA representative on the panel. “We have seen some of this future in Ukraine…where autonomous but well-designed technology that is incorporating the latest in software developments and some AI is able to quite precisely identify targets and hit those targets,” said Cohen. “It hasn’t been in an urban environment [that] Ukraine has been using this technology, it’s been mostly out in the field…but the trend is all towards precision.”

“I think there is a decent prospect that we will be in a world where some of what Gen. Milley is talking about, [where] the mass casualty events that have been a feature of wars of the past, will not be a feature of wars of the future,” Cohen concluded.

Palantir’s Karp was even more bullish on what companies like his could deliver. “Technology should be able to reduce drastically the amount of collateral damage, civilian deaths,” he told the conference. “How are we going to reduce civilian deaths to the smallest amount humanly possible?… That is a tech problem.”

Schmidt was more guarded. “There’s reasons to be optimistic that the technology, when well executed…will ultimately [result] in both less war and also less civilian casualties,” he said. “You’re going to have to come up with some rules.

“Today, the law basically says that a human has to be in control,” Schmidt continued. (In truth, Pentagon policy is more nuanced.) But, he argued, that gives significant leeway for autonomous weapons. For example, he said, a human could direct a hunter-killer to search a “bounded and precise area” for specific types of legally valid targets, then let the algorithm decide when it had found a suitable enemy to destroy.

“That’s all okay legally,” Schmidt said. “I think that will work just fine — until war speeds up.” There are all sorts of circumstances, from stopping cyber attacks to intercepting incoming missiles, where human decisionmakers already struggle to react in time, and AI will likely make those timelines even tighter, he warned: “This compression of time is the one thing we don’t have good answers on.”

Indeed, one of the major attractions of AI for military commanders is not unmanned weapons, but automated battle planning systems to help them make well-informed decisions faster. “[The ability] to crunch massive amounts of data in software, faster, relatively, than your enemy…that’s going to be potentially decisive,” Milley said.

That need for speed will make it harder to take precautions against civilian casualties, as the Israelis have found out with their AIs.
